- Maker of fastest AI chip in the world makes a splash with DeepSeek onboarding

- Cerebras says the solution will run 57x faster than on GPUs but doesn’t mention which GPUs

- DeepSeek R1 will run on Cerebras cloud and the data will remain in the USA

In a not-so-surprising move, Cerebras has announced that it will support DeepSeek, specifically the R1 70B reasoning model. The move comes after Groq and Microsoft confirmed they would also bring the new kid on the AI block to their respective clouds. AWS and Google Cloud have yet to do so, but anybody can run the open source model anywhere, even locally.

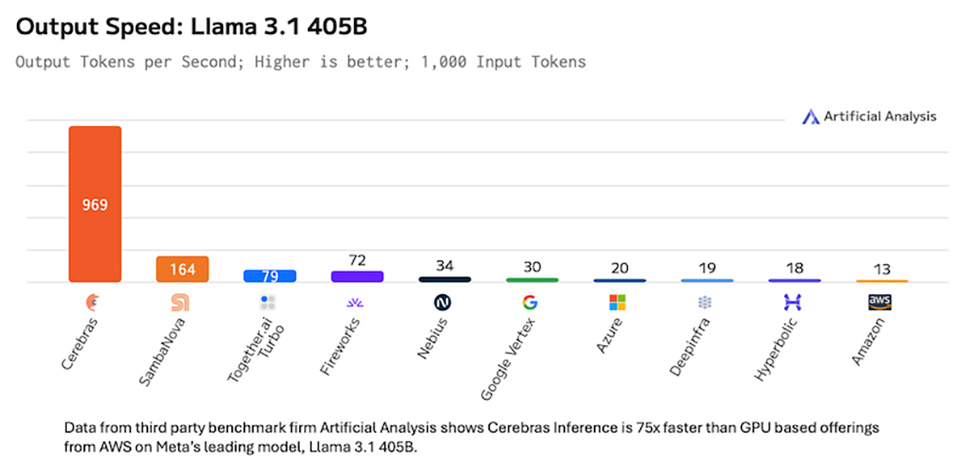

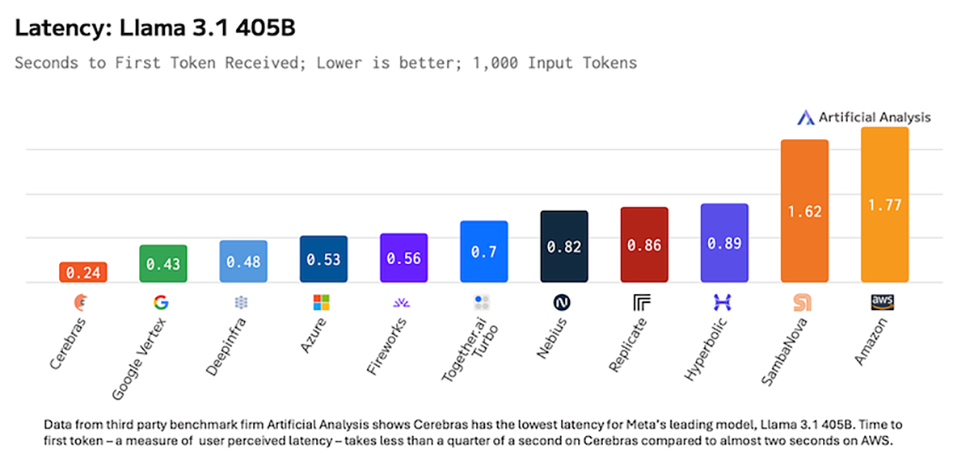

The AI inference chip specialist will run DeepSeek R1 70B at 1,600 tokens/second, which it claims is 57x faster than any R1 provider using GPUs; one can deduce that a GPU-in-the-cloud solution (in this case DeepInfra) apparently reaches about 28 tokens/second. Serendipitously, Cerebras’ latest chip is 57x bigger than the H100. I have reached out to Cerebras to find out more about that claim.
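For readers who want to check the deduction, here is a minimal sketch of the arithmetic in Python. The two inputs are the figures quoted above (the 57x ratio is Cerebras' own claim); the 1,000-token response length is a hypothetical value picked purely for illustration.

```python
# Figures quoted in the article; the 57x ratio is Cerebras' claim, not a measurement of ours.
cerebras_tokens_per_second = 1600
claimed_speedup = 57

# Dividing the Cerebras figure by the claimed speedup gives the implied GPU throughput.
implied_gpu_tokens_per_second = cerebras_tokens_per_second / claimed_speedup
print(f"Implied GPU throughput: {implied_gpu_tokens_per_second:.1f} tokens/second")

# Hypothetical response length, chosen only to make the gap tangible.
response_tokens = 1000
cerebras_seconds = response_tokens / cerebras_tokens_per_second
gpu_seconds = response_tokens / implied_gpu_tokens_per_second
print(f"Cerebras: {cerebras_seconds:.3f} s, GPU provider: {gpu_seconds:.3f} s")
```

Under those assumptions, a 1,000-token answer would take well under a second on Cerebras and over half a minute on the implied GPU setup.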

Research by Cerebras also demonstrated that DeepSeek is more accurate than OpenAI models on a number of tests. The model will run on Cerebras hardware in US-based datacentres to assuage the privacy concerns that many experts have expressed. DeepSeek – the app – will send your data (and metadata) to China where it will most likely be stored. Nothing surprising here as almost all apps – especially free ones – capture user data for legitimate reasons.

Cerebras’ wafer-scale solution positions it uniquely to benefit from the impending AI cloud inference boom. WSE-3, which is the fastest AI chip (or HPC accelerator) in the world, has almost one million cores and a staggering four trillion transistors. More importantly though, it has 44GB of SRAM, which is the fastest memory available, even faster than the HBM found on Nvidia’s GPUs. Since WSE-3 is just one huge die, the available memory bandwidth is huge, several orders of magnitude greater than what the Nvidia H100 (and for that matter the H200) can muster.

A price war is brewing ahead of WSE-4 launch

No pricing has been disclosed yet but Cerebras, which is usually coy about that particular detail, did divulge last year that Llama 3.1 405B on Cerebras Inference would cost $6/million input tokens and $12/million output tokens. Expect DeepSeek to be available for far less.
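To put per-token pricing in perspective, here is a rough sketch of what a workload would cost at the Llama 3.1 405B rates quoted above. The function name `estimate_cost` and the token counts are hypothetical, made up for illustration; DeepSeek pricing has not been disclosed, so this says nothing about what it will cost.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_million: float = 6.0,
                  output_price_per_million: float = 12.0) -> float:
    """Return the cost in US dollars for a given number of input and output tokens.

    The default prices are the Llama 3.1 405B rates Cerebras disclosed last year.
    """
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost


# Hypothetical workload: 2 million input tokens and 500,000 output tokens.
workload_cost = estimate_cost(2_000_000, 500_000)
print(f"Estimated cost: ${workload_cost:.2f}")
```

Swapping in other per-million rates through the two price parameters makes it easy to compare providers once DeepSeek pricing is announced.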

WSE-4, the successor to WSE-3, is expected to launch in 2026 or 2027 (depending on market conditions) and should deliver a significant boost in the performance of DeepSeek and similar reasoning models.

The arrival of DeepSeek is also likely to shake the proverbial AI money tree, bringing more competition to established players like OpenAI or Anthropic and pushing prices down.

A quick look at Docsbot.ai’s LLM API calculator shows OpenAI is almost always the most expensive in all configurations, sometimes by several orders of magnitude.
